Adoption of Real-Time Machine Learning for Cyber Risk Assessment in IoT Environments

The Internet of Things (IoT) has emerged as an extension of the Internet and has significantly changed our world. A tremendous number of IoT applications have greatly eased people’s daily lives and improved resource usage and allocation, e.g., power bank sharing and bike sharing. However, the inherent openness of the underlying wireless systems renders these IoT entities vulnerable to a broad range of cyber risks, e.g., spectrum vulnerabilities that can be a source of adversarial inference.

Moreover, as our reliance on wireless/connected devices and radios has increased dramatically, public safety, business operations, socializing, and navigation, as well as critical national communication infrastructures, have become more vulnerable to cyber threats. Hence, policymakers and industry have recently begun to recognize that the expansion of connected devices and their cyber susceptibility pose a large risk of malicious attacks and adversarial inference. There is therefore a need to identify and understand these risks in order to design efficient security solutions. Lastly, the economic impact of IoT devices and their associated security vulnerabilities is growing further with the integration of artificial intelligence (AI) into human-computer interaction (HCI) in domains such as banking and insurance. Consequently, cyber risks are growing in terms of both frequency and severity.

Why Real-Time Machine Learning (RTML)?

IoT entities, including but not limited to ubiquitous cameras and sensors, generate abundant volumes of data for exploration and analysis. This overflow of data opens up a broad scope for exploring the associated cyber risks, especially with the assistance of machine learning mechanisms. Unlike traditional machine learning, where the cloud is an option for hosting its modular applications, real-time machine learning (RTML), also known as online or incremental learning, holds great potential here, because cloud-centric IoT environments incur growing data-movement overhead (e.g., energy concerns) as they expand. It can therefore be advantageous to leverage reliable IoT entities to perform inference operations locally, instead of constantly transferring massive amounts of raw data to the cloud. Additionally, because traditional models are trained offline on a static dataset, they can be inefficient at processing dynamic IoT data in situ, which may lead to poor prediction accuracy. A further benefit of deploying IoT-enabled cyber threat detectors using RTML is the availability of low-power platforms and fast prototyping.
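To make the contrast with offline training concrete, here is a minimal sketch of incremental learning. It assumes a binary benign/malicious label and uses a plain online logistic regression trained by stochastic gradient descent; the class name, learning rate, and two-feature toy stream are hypothetical illustrations, not the authors' method. Each call to `partial_fit` updates the model from a single example and then discards it, so no raw data has to be stored or shipped to the cloud.

```python
import math
from typing import List


class OnlineLogisticRegression:
    """Binary classifier updated incrementally, one example at a time, via SGD."""

    def __init__(self, n_features: int, learning_rate: float = 0.1) -> None:
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x: List[float]) -> float:
        # Linear score squashed into a probability of the "malicious" class.
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        return 1.0 / (1.0 + math.exp(-z))

    def partial_fit(self, x: List[float], y: int) -> None:
        # One SGD step on the log-loss for a single (x, y) pair;
        # the example is not stored afterwards.
        error = self.predict_proba(x) - y
        self.weights = [
            w - self.learning_rate * error * xi for w, xi in zip(self.weights, x)
        ]
        self.bias -= self.learning_rate * error


# Hypothetical stream: two normalized traffic features, label 1 = malicious.
stream = [([0.9, 0.8], 1), ([0.1, 0.2], 0)] * 200
model = OnlineLogisticRegression(n_features=2, learning_rate=0.5)
for features, label in stream:
    model.partial_fit(features, label)  # the model adapts as each sample arrives
```

The same one-example-at-a-time pattern is exposed in scikit-learn through `partial_fit` on estimators such as `SGDClassifier`, which may be preferable to a hand-rolled learner in practice.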

Model Visioning

As a branch of machine learning, RTML can enhance classification and prediction accuracy in malware and cyber risk detection through base learners whose outputs can be combined into an alert decision. Here, ensemble learning and a joint decision process, if integrated, offer a further opportunity to improve the decision accuracy of RTML classifiers and to help devise learning techniques that generalize better.
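One simple way to realize the joint decision described above is a weighted majority vote over the base learners' outputs. The sketch below assumes each learner emits a maliciousness score in [0, 1] and carries a fixed, hypothetical trust weight; the function name and both thresholds are illustrative assumptions rather than part of the original framework.

```python
from typing import Sequence


def joint_alert_decision(
    learner_scores: Sequence[float],
    learner_weights: Sequence[float],
    score_threshold: float = 0.5,
    vote_threshold: float = 0.5,
) -> bool:
    """Weighted majority vote: alert if the malicious-voting weight share exceeds vote_threshold."""
    if len(learner_scores) != len(learner_weights):
        raise ValueError("each learner needs exactly one weight")
    total_weight = sum(learner_weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    # Each base learner casts a binary "malicious" vote by thresholding its score.
    malicious_weight = sum(
        w for s, w in zip(learner_scores, learner_weights) if s >= score_threshold
    )
    return malicious_weight / total_weight > vote_threshold


# Three hypothetical learners; the third is trusted twice as much as the others.
print(joint_alert_decision([0.9, 0.4, 0.7], [1.0, 1.0, 2.0]))  # True (3.0 / 4.0 = 0.75)
print(joint_alert_decision([0.9, 0.2, 0.3], [1.0, 1.0, 2.0]))  # False (1.0 / 4.0 = 0.25)
```

Weighting lets a better-validated learner outvote weaker ones; plain majority voting is the special case in which all weights are equal.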

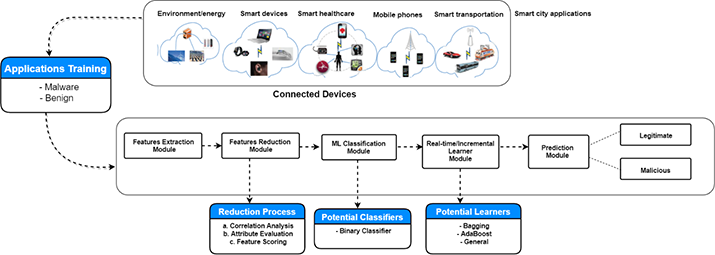

The key idea behind designing an efficient IoT-enabled threat detector using RTML is to identify appropriate features that describe the raw data. As depicted in Figure 1, a feature reduction module can be applied after the feature extraction stage to cut down the number of low-level features. Here, a correlation-based attribute evaluation can be performed on the training dataset to identify essential architectural parameters and the distinguishing characteristics of applications. Building on this, a feature scoring technique can rank the selected features by their relevance to the detector's target variable.
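The reduction and scoring steps can be sketched as follows, assuming correlation-based filtering: a feature is dropped when its absolute Pearson correlation with an already-kept feature reaches a redundancy threshold, and the survivors are ranked by absolute correlation with the target label. The 0.9 threshold, the function names, and the feature names in the example are hypothetical; other relevance scores (e.g., mutual information) could be swapped in.

```python
import math
from typing import Dict, List


def pearson(a: List[float], b: List[float]) -> float:
    """Pearson correlation coefficient; 0.0 if either column is constant."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / math.sqrt(var_a * var_b)


def reduce_and_score(
    features: Dict[str, List[float]],
    target: List[float],
    redundancy_threshold: float = 0.9,
) -> Dict[str, float]:
    # Feature reduction: keep a feature only if it is not strongly
    # correlated with any feature that has already been kept.
    kept: List[str] = []
    for name, column in features.items():
        if all(abs(pearson(column, features[k])) < redundancy_threshold for k in kept):
            kept.append(name)
    # Feature scoring: rank survivors by |correlation| with the target.
    scores = {name: abs(pearson(features[name], target)) for name in kept}
    return dict(sorted(scores.items(), key=lambda item: item[1], reverse=True))


# Hypothetical training data: "pkt_rate_x2" is a redundant copy of "pkt_rate".
features = {
    "pkt_rate": [1, 2, 3, 4, 5, 6],
    "pkt_rate_x2": [2, 4, 6, 8, 10, 12],
    "noise": [3, 1, 4, 1, 5, 9],
}
target = [0, 0, 0, 1, 1, 1]
ranking = reduce_and_score(features, target)
print(list(ranking))  # ['pkt_rate', 'noise']
```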

Serving the Predictions

So far, only incremental learning of the models has been considered, but we also want to serve predictions in real time. One recommendation here is to use a linear learner: to make a prediction, only the current context and the learned parameters are needed (i.e., there is no need to know a network architecture, including the choice of activation functions). In a typical Redis use case, the linear learner can learn from examples pulled from a Redis FIFO queue and then periodically save the model parameters back into Redis. The key point is that saving (and indeed retrieving) the parameters should be an atomic operation (e.g., using the SET/GET commands in the Redis example). For more complex interactions, a locking mechanism may be used; in either case, the key requirement is that all model parameter changes are propagated in real time to avoid wrong or low-accuracy predictions.
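A minimal sketch of the training side follows. To keep it runnable without a Redis server, a small lock-protected dictionary (`InMemoryStore`, hypothetical) stands in for Redis and a `collections.deque` stands in for the FIFO queue; in a real deployment, redis-py's `set`/`get` and list commands such as `rpush`/`lpop` would play these roles. The learner is a plain linear regressor trained by SGD on squared error, chosen only for illustration, and the key name `model:params` is an assumption. The important detail is that all parameters are serialized into one value, so a single SET replaces them atomically.

```python
import json
import threading
from collections import deque
from typing import Deque, Dict, List, Optional, Tuple

PARAMS_KEY = "model:params"  # hypothetical key name


class InMemoryStore:
    """Hypothetical stand-in for Redis: each set/get on a key is atomic."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}
        self._lock = threading.Lock()

    def set(self, key: str, value: str) -> None:
        with self._lock:
            self._data[key] = value

    def get(self, key: str) -> Optional[str]:
        with self._lock:
            return self._data.get(key)


def train_from_queue(
    queue: Deque[Tuple[List[float], float]],
    store: InMemoryStore,
    n_features: int,
    lr: float = 0.1,
    save_every: int = 50,
) -> None:
    """Consume examples in FIFO order, updating a linear model by SGD."""
    weights = [0.0] * n_features
    bias = 0.0
    seen = 0
    while queue:
        x, y = queue.popleft()  # oldest example first
        error = bias + sum(w * xi for w, xi in zip(weights, x)) - y
        weights = [w - lr * error * xi for w, xi in zip(weights, x)]
        bias -= lr * error
        seen += 1
        if seen % save_every == 0 or not queue:
            # One serialized value means one SET, so readers never see weights
            # from one update mixed with a bias from another.
            store.set(PARAMS_KEY, json.dumps({"weights": weights, "bias": bias}))


# Example: learn y = 2x from a stream of 1,000 noise-free samples.
store = InMemoryStore()
examples = deque(([x / 10], 2.0 * x / 10) for _ in range(100) for x in range(1, 11))
train_from_queue(examples, store, n_features=1)
params = json.loads(store.get(PARAMS_KEY))
```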

Figure 1: Real-time machine learning-based cyber risk detection framework.

It is sensible to separate the training logic from the inference logic. Assuming the model parameters are updated atomically, serving predictions proceeds as follows: (1) preprocess the context to make it suitable for inference, (2) retrieve the model parameters atomically, (3) calculate the linear combination of the model weights and context features, and (4) return the result. Another key reason for using an in-memory store becomes apparent from this logic: every prediction request must retrieve the latest model parameters, since they may change rapidly. A traditional relational database is likely to be too slow for such online learning purposes.
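The four serving steps can be sketched as a single function. It assumes the parameters were published as one JSON value under a hypothetical key `model:params`, and it accepts any getter (e.g., redis-py's `Redis.get`, or `dict.get` for testing) so that step (2) is a single atomic read. The feature names and the fill-missing-with-zero preprocessing are illustrative assumptions.

```python
import json
from typing import Callable, Dict, List, Optional, Union

PARAMS_KEY = "model:params"  # hypothetical key written by the training process


def serve_prediction(
    raw_context: Dict[str, float],
    feature_order: List[str],
    get_value: Callable[[str], Optional[Union[str, bytes]]],
) -> float:
    # (1) Preprocess: map the raw context onto the model's feature order,
    #     filling features missing from this request with 0.0.
    x = [float(raw_context.get(name, 0.0)) for name in feature_order]

    # (2) Retrieve all parameters with ONE read, so weights and bias come
    #     from the same published version even while training continues.
    blob = get_value(PARAMS_KEY)
    if blob is None:
        raise RuntimeError("no model parameters have been published yet")
    params = json.loads(blob)
    weights, bias = params["weights"], params["bias"]
    if len(weights) != len(x):
        raise ValueError("feature count does not match the stored model")

    # (3) Linear combination of model weights and context features.
    score = bias + sum(w * xi for w, xi in zip(weights, x))

    # (4) Return the result (a raw score; thresholding is left to the caller).
    return score


# Example: a dict stands in for the in-memory store (hypothetical values).
store = {PARAMS_KEY: json.dumps({"weights": [2.0, -1.0], "bias": 0.5})}
features = ["pkt_rate", "failed_logins"]
print(serve_prediction({"pkt_rate": 3.0, "failed_logins": 1.0}, features, store.get))  # 5.5
```

Because the getter is injected, the same function can be pointed at a live Redis client without changing the serving logic.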

Future Directions and Trends: Flexibility Comes at a Price

As IoT applications become increasingly diverse in evolving smart cities, RTML can be exploited in IoT environments to improve their reliability and, in particular, their security. Existing machine learning and deep learning solutions are computationally and energy expensive. A grand challenge here is how to merge existing traditional machine learning solutions into incremental online learning/RTML to boost the overall accuracy of cyber risk detection. A combination of these mechanisms can strike a balance between energy and computation cost on the one hand and detection accuracy on the other, which will be vital for next-generation IoT systems. Moreover, integrating RTML into such systems will require overhauling the entire communication stack across the application and physical layers. Lastly, traditional detection and prevention mechanisms for security threats (e.g., malware), such as signature-based and/or semantics-based detectors, are software solutions that incur notable computational cost and overhead. Therefore, threat detection could be improved by deploying simplified classifiers, regardless of which detection approach is used.

Mohamed Rahouti received the M.S. degree in mathematics and the Ph.D. degree in electrical engineering from the University of South Florida, Tampa, FL, USA, in 2016 and 2020, respectively. He is currently an Assistant Professor in the Department of Computer and Information Sciences, Fordham University, Bronx, NY, USA. He has numerous academic achievements to his credit. His current research focuses on computer networking, software-defined networking (SDN), and network security with applications to smart cities.

Moussa Ayyash (M'98–SM'12) received his B.S., M.S., and Ph.D. degrees in Electrical and Computer Engineering. He is currently a Professor at the Department of Mathematics and Computer Science, Chicago State University, Chicago. He is the Director of the Center of Information and Security Education and Research (CINSER). His current research interests span digital and data communication areas, wireless networking, visible light communications, network security, Internet of Things, and interference mitigation. Dr. Ayyash is a member of the IEEE Computer and Communications Societies and a member of the Association for Computing Machinery. He is a recipient of the 2018 Best Survey Paper Award from the IEEE Communications Society.