An Architecture for IoT Analytics and (Real-time) Alerting

Internet of Things (IoT) applications pose many challenges across different research fields, such as electronics, telecommunications, computer science, and statistics. In this article, we focus on so-called “IoT Analytics”, which can be defined as the set of approaches and tools used to extract value from IoT data.

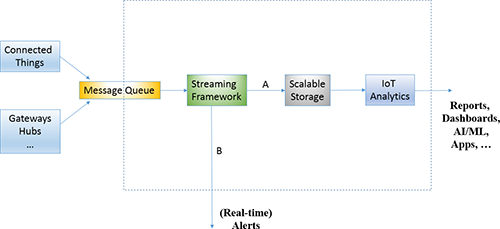

Of course, the meaning of the word “value” may vary depending on the specific application domain (e.g., optimizing a production line to save time and money, or increasing revenues thanks to new services and new business models). After describing one of the use cases that helped us define the architecture depicted in Figure 1, we discuss some of the technologies that can be used to implement a platform for IoT Analytics. Such a platform makes it possible to:

- increase data retention, thanks to scalable systems like Hadoop, Cassandra, etc. In this way, the whole set of data coming from physical devices is available to data scientists and data analysts;

- generate detailed reports about equipment usage, customer habits, etc., from the collected data;

- carry out in-depth analyses on gathered data to predict future failures, utilization peaks, etc.;

- apply the advanced models defined by data scientists in conjunction with streaming technologies like Apache Spark Streaming, Apache Flink, etc. This enables the implementation of (near) real-time alerting systems.

Use case: Industry 4.0

We propose a general architecture for IoT Analytics that can be used in both consumer and industrial scenarios. In this section, we describe how it can be used in the Industry 4.0 domain (a.k.a. Industrial IoT, or IIoT) to detect events of interest, anomalies, etc. on a production line. To do so, the following approaches can be used:

- apply static rules derived from what happened in the past. In this case, analyzing how the production line performed in the past helps human operators define static rules (e.g., “if machine B stops for more than 3 minutes and machine C for more than 5 minutes, then machine A is probably broken”) that detect the events of interest. This approach is effective in many cases, but it is not optimal because it can only express simple “if-then” logic. Moreover, it does not adapt when conditions change;

- apply Artificial Intelligence/Machine Learning (AI/ML) techniques to identify the events of interest. Advanced analytics techniques can capture more complex logic that goes beyond the simple “if-then” approach. Furthermore, AI/ML can reveal patterns that were unknown to the operator and can self-adapt as conditions change. AI/ML models are built off-line (i.e., on historical data gathered in the past) but, once defined, they can be applied in near real time to respond quickly. In particular, we deployed our models in a centralized system where a streaming framework (i.e., Apache Spark Streaming) applies them to incoming data flows in near real time. A general discussion of where such models should be deployed (i.e., Edge vs. Fog vs. Cloud Computing) is out of the scope of this article, since we focus on the solution that we deployed in real-world scenarios.
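The static rule quoted above translates directly into code. The following Python sketch is illustrative only: the `MachineStatus` record, the `machine_a_probably_broken` function and the assumption that each machine reports how many minutes it has been stopped are hypothetical, not part of the deployed system. It also makes the limitation visible: the thresholds are fixed constants that an operator must revise by hand whenever the line changes.

```python
from dataclasses import dataclass


@dataclass
class MachineStatus:
    # Hypothetical status record: minutes the machine has been stopped (0 if running).
    name: str
    stopped_minutes: float


# Thresholds taken from the example rule in the text; fixed, not learned.
B_STOP_LIMIT_MIN = 3.0
C_STOP_LIMIT_MIN = 5.0


def machine_a_probably_broken(status: dict[str, MachineStatus]) -> bool:
    """Static "if-then" rule: if machine B has been stopped for more than
    3 minutes and machine C for more than 5 minutes, flag machine A."""
    b = status["B"].stopped_minutes
    c = status["C"].stopped_minutes
    return b > B_STOP_LIMIT_MIN and c > C_STOP_LIMIT_MIN


snapshot = {
    "B": MachineStatus("B", stopped_minutes=4.0),
    "C": MachineStatus("C", stopped_minutes=6.5),
}
print(machine_a_probably_broken(snapshot))  # True
```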

Architecture and Technologies

In this section, we describe the architecture that we defined for IoT use cases and some of the technologies that we used in real-world deployments.

Figure 1: The architecture for IoT Analytics.

The data received by the Message Queue system is forwarded to the Streaming Framework, a system optimized for processing data flows. This component performs two main actions, namely:

- ETL (Extract, Transform, Load) operations: data coming from the physical devices must be pre-processed to make them suitable for storage (see letter A in Figure 1). Typical operations performed by the streaming framework are data filtering, data enrichment, format conversion, aggregation (e.g., aggregating data over a specified time window), etc.

- Apply AI/ML models on the fly: the algorithms (e.g., classification, regression, anomaly detection, forecasting) defined off-line by data scientists are deployed on the streaming framework and applied as soon as new data reach the platform. Depending on the AI/ML result, this component may fire alerts in (near) real time (see letter B in Figure 1). Since the platform aims to generate value from data, the automated generation of (near) real-time alerts is the first concrete example of “value generation”.
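The two actions of the streaming framework can be sketched in plain Python. This is a single-process illustration under stated assumptions, not Spark Streaming code: the names `Reading`, `tumbling_window_means`, `ZScoreModel` and `process_batch` are hypothetical, and a simple z-score detector (mean and standard deviation fitted off-line on historical values) stands in for whatever model data scientists would actually train. Step A filters out non-finite readings and averages values per device over fixed 60-second windows; step B scores each window and emits an alert string when the model flags it.

```python
import math
import statistics
from dataclasses import dataclass


@dataclass
class Reading:
    device_id: str
    timestamp: float  # seconds since epoch (hypothetical message format)
    value: float


def tumbling_window_means(readings, window_s=60):
    """Step A (ETL): drop non-finite values, then average each device's
    readings over fixed, non-overlapping windows of window_s seconds."""
    buckets = {}
    for r in readings:
        if not math.isfinite(r.value):
            continue  # filtering: discard NaN/inf sensor glitches
        window_start = int(r.timestamp // window_s) * window_s
        buckets.setdefault((r.device_id, window_start), []).append(r.value)
    return {key: statistics.fmean(vals) for key, vals in buckets.items()}


@dataclass
class ZScoreModel:
    """Stand-in for a model defined off-line by data scientists."""
    mean: float
    stdev: float
    threshold: float = 3.0

    @classmethod
    def fit(cls, historical_values, threshold=3.0):
        # Off-line step: learn the normal operating range from past data.
        return cls(statistics.fmean(historical_values),
                   statistics.stdev(historical_values), threshold)

    def is_anomalous(self, value):
        return abs(value - self.mean) > self.threshold * self.stdev


def process_batch(readings, model, window_s=60):
    """Step B: score every aggregated window and collect alerts."""
    alerts = []
    windows = tumbling_window_means(readings, window_s)
    for (device_id, window_start), avg in sorted(windows.items()):
        if model.is_anomalous(avg):
            alerts.append(f"ALERT device={device_id} window={window_start} mean={avg:.2f}")
    return alerts


# Off-line: fit on made-up historical values.
model = ZScoreModel.fit([20.1, 19.8, 20.3, 20.0, 19.9, 20.2])

# Online: a micro-batch of incoming readings.
incoming = [
    Reading("m1", 0, 20.0), Reading("m1", 30, 20.2),   # window 0, mean 20.1
    Reading("m1", 60, 23.9), Reading("m1", 90, 24.1),  # window 60, mean 24.0
    Reading("m1", 100, float("nan")),                  # removed by the filter
]
for alert in process_batch(incoming, model):
    print(alert)  # ALERT device=m1 window=60 mean=24.00
```

In a real deployment the streaming framework would distribute the same logic across micro-batches and forward the alerts to downstream consumers; here they are simply returned as a list.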

The rightmost component of Figure 1 is the IoT Analytics tool, i.e., software typically used by analysts and data scientists to process data (e.g., to define an AI/ML model) and to visualize it (e.g., to create a report). The insights obtained from IoT data by means of IoT Analytics tools represent another example of “value generation”.
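As a small example of the kind of report an IoT Analytics tool might produce from stored data, the sketch below computes the share of time each machine was running. It assumes hypothetical records with `machine` and `status` fields sampled at regular intervals, so that counting samples approximates counting time; `utilization_report` is an illustrative name, not part of any product mentioned here.

```python
from collections import Counter


def utilization_report(records):
    """Share of status samples in which each machine was running.
    Assumes evenly spaced samples, so sample counts approximate time."""
    total = Counter()
    running = Counter()
    for r in records:
        total[r["machine"]] += 1
        if r["status"] == "running":
            running[r["machine"]] += 1
    return {m: running[m] / total[m] for m in sorted(total)}


records = [
    {"machine": "A", "status": "running"},
    {"machine": "A", "status": "stopped"},
    {"machine": "B", "status": "running"},
    {"machine": "B", "status": "running"},
]
for machine, share in utilization_report(records).items():
    print(f"{machine}: {share:.0%}")  # A: 50%, then B: 100%
```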

Regarding the technological landscape, many different tools, both open source and proprietary, are available for each component shown in Figure 1. Because of the lack of standardization and the limited maturity of the IoT field, we note that many IoT deployments today are tailor-made. Since every new project may require a different approach depending on the specific situation, it is important to be familiar with a multitude of technologies.

- Message Queue: Apache Kafka[1] is a widely used distributed MQ system, but MQTT-based frameworks like Mosquitto[2] are also very common.

- Streaming framework: Apache Spark Streaming[3] is the Spark component for distributed processing of data flows. It is usually deployed in conjunction with Spark SQL for ETL and Spark MLlib for (near) real-time Machine Learning.

- Storage: even though we draw just one block in the architecture, it is common to store data on multiple systems at the same time. For example, HDFS (Hadoop Distributed File System[4]) is a common choice for long-term storage on which massive analyses can be executed (e.g., Apache Parquet[5] is a columnar file format well suited to SQL queries). Elasticsearch[6] is a distributed search engine providing efficient search and analytics capabilities. Both systems can be deployed together to get the best of both worlds (i.e., fast searches and massive computations).

- IoT Analytics: this is a very crowded field, with ad-hoc solutions, traditional business intelligence tools adapted for IoT/Big Data, and Big Data-native solutions. For instance, we developed our own end-to-end Big Data-native solution called Doolytic[7], which is very flexible because it can be used both by data scientists (through its Notebook Computing integration) and by non-technical users (through a user-friendly interface).
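The Storage item notes that the same data is often written to several systems at once. The sketch below shows that fan-out pattern with two in-memory stand-ins: `ArchiveSink` plays the role of an append-only long-term store (such as Parquet files on HDFS) suited to full scans, and `SearchIndexSink` plays the role of a search engine (such as Elasticsearch) that keeps an index for fast lookups. All class and function names are hypothetical; a real deployment would use each system's own client or connector instead.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class ArchiveSink:
    """Stand-in for an append-only long-term store used for massive analyses."""
    rows: list = field(default_factory=list)

    def write(self, record: dict) -> None:
        self.rows.append(record)


@dataclass
class SearchIndexSink:
    """Stand-in for a search engine: maps (field, value) pairs to records."""
    index: dict = field(default_factory=lambda: defaultdict(list))

    def write(self, record: dict) -> None:
        for key, value in record.items():
            self.index[(key, value)].append(record)

    def search(self, key, value) -> list:
        # .get avoids creating empty entries for unknown keys
        return self.index.get((key, value), [])


def fan_out(record: dict, sinks) -> None:
    """Write the same record to every configured storage system."""
    for sink in sinks:
        sink.write(record)


archive, search = ArchiveSink(), SearchIndexSink()
for rec in [{"device": "m1", "status": "stopped"},
            {"device": "m2", "status": "running"}]:
    fan_out(rec, [archive, search])

print(len(archive.rows))                   # 2
print(search.search("status", "stopped"))  # [{'device': 'm1', 'status': 'stopped'}]
```

Writing to each store independently keeps the scan-oriented archive and the lookup-oriented index decoupled, at the cost of having to handle a write that succeeds on one system and fails on the other.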

In this article, we described our approach to IoT Analytics. It is still too early to know what a “standard” architecture for IoT Analytics will look like, but we think that capabilities like streaming, real-time alerting, real-time reporting, and scalable storage represent important building blocks of the IoT systems of tomorrow. In the future, we will investigate introducing Deep Learning frameworks like TensorFlow[8] to deploy more advanced algorithms (e.g., autoencoders for anomaly detection) and/or using the built-in Machine Learning capabilities of Elasticsearch.

[1] https://kafka.apache.org/

[2] https://mosquitto.org/

[3] https://spark.apache.org/streaming/

[4] http://hadoop.apache.org/

[5] https://parquet.apache.org/

[6] https://www.elastic.co/products/elasticsearch/

[7] http://doolytic.com/

[8] https://www.tensorflow.org/

Michele Stecca received his master’s degree in Software Engineering from the University of Padova and his Ph.D. from the University of Genova. He worked as a researcher at the ICSI in Berkeley, CA before joining Horsa Group as IoT & Big Data Consultant. He has participated in important projects co-financed by the EU in these areas for Telefonica, Telecom Italia, FIAT, Atos and Siemens (in the IoT field he has been involved in the EU FP7 collaborative project iCore www.iot-icore.eu). Dr. Stecca has authored numerous articles for international industry publications, presented at many international events and has served as an Adjunct Professor at the Universities of Genova and Padova. For more information see: https://sites.google.com/site/steccami/

Sergio Fraccon is an IT leader with an MBA and 20 years of experience in national and multinational companies, on both the demand and the supply side, focused on realigning business processes with company strategy using IT as an enabler. Through these experiences he has developed skills in managing international projects and teams across different countries and industries (from manufacturing and logistics to fashion). He is currently Director of the Business Analytics Data Strategy Unit at Horsa Group, where he oversees data-intensive environments in which analytics is pervasive, not only as a Decision Support System but already integrated into daily operational processes. His main goal is to bring technologies like Big Data, Machine Learning and Artificial Intelligence into practical solutions for companies of all sizes, making them accessible not only to large organizations but also to mid-sized companies.

Comments

2017-09-15 @ 4:10 PM by Strnadl, Christoph

I am not sure if I am missing something, but I don't see how this architecture is different from the well-known Lambda architecture. We have been applying this architecture for years now, but it surely helps to have it reinforced that it actually works in practice.

Secondly, in practically all our IoT projects, very soon clients discover they need a back-channel from the real-time analytics component (which, incidentally, is not even shown in the diagram) back to the device, e.g., to change some device parameters. Unfortunately, however, this omission practically stymies the application of this architecture to (I am exaggerating) trivial use cases where you get some alerts on real-time data BUT no action at the device level is needed. IN MANY projects -- especially if IoT maturity grows -- however, getting back to the devices is where very large business benefits are buried.

Additionally, you need a channel from the real-time analytics to the corporate/enterprise IT systems as well -- unless you want to limit alerting and actions to dashboarding or user-mediated activities. This, of course, no longer is trivial and certainly needs a full-fledged integration (+ process automation) platform. However, again, the end-to-end process integration from devices to corporate IT systems (think of ERP or CRM) and back to devices is where large business values can be uncovered.

And, actually, in real-world projects one needs this back channel basically as step 0 (yes, the very first step) for device management. This, of course, is not shown at all in this architecture -- which, for all practical purposes, severely limits the application of it.

So, if you limit your use case to just (real-time) analytics -- AND REALLY NOTHING MORE -- then this architecture template may indeed serve you well.

IF, however, you need ANYTHING OUTSIDE this (artificially, in my practical view) limited use case, then a FULLER ARCHITECTURE is required. And this will be the case for many (if not all) integrated real-world use cases.

By the way, these much (broader) architectures are readily available.

P.S. The authors also mention "fog" and "edge" computing. Unfortunately, we are seeing an increasing demand for "fog analytics" and "edge analytics" -- so even from a pure (real-time) analytical point of view, this architecture definitely is not future-proof.