EWSN Dependability Competition: Experiences and Lessons Learned

Low-power wireless sensor networks are an important underlying infrastructure for the Internet of Things (IoT) and are increasingly used in application domains imposing strict dependability requirements on network performance, such as smart production, smart cities, or connected cars. These application domains are safety-critical: any failure in meeting application-specific requirements and in conveying information in a dependable (i.e., reliable, timely, and energy-efficient) manner may result in high costs and physical damage to people or things.

A key challenge in achieving dependable low-power wireless IoT communications is the increasing congestion of the freely available ISM bands. Radio interference from surrounding wireless devices and electrical appliances often impairs packet reception, reduces throughput, leads to high latencies, and causes retransmissions that may accelerate the depletion of batteries.

For more than a decade, academia and industry have proposed a large number of protocols and RF mitigation techniques to increase the dependability of low-power wireless IoT communications in the presence of radio interference. However, these solutions have rarely been benchmarked under the exact same settings and with a focus on end-to-end performance. As a result, the research community is still debating which communication technique is the most dependable for a given application scenario (e.g., stateful routing vs. stateless flooding).

To shed light on this question, we have started a competition that quantitatively compares the dependability of state-of-the-art wireless sensing systems in environments rich in radio interference. The first two editions of the competition, co-located with the International Conference on Embedded Wireless Systems and Networks (EWSN), focused on a scenario emulating the operation of a wireless sensor network monitoring discrete events [1].

Benchmarking end-to-end system performance under repeatable conditions

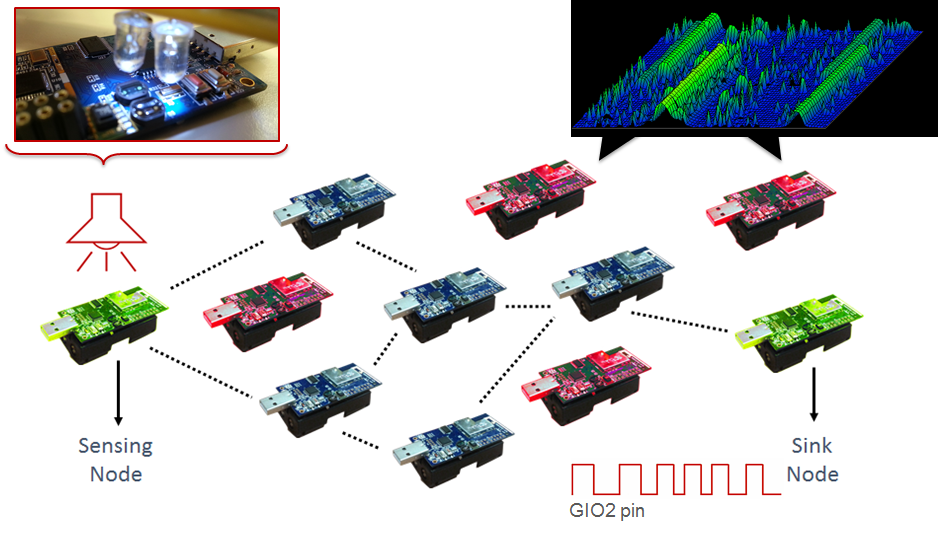

The scenario employed in the competition is sketched in Figure 1. A sensor node is placed in proximity of a light source and monitors its brightness. As soon as it detects a sudden variation in the lighting conditions, the sensor node reports this information over a multi-hop wireless network to a central unit. The competing solutions are evaluated on end-to-end latency (i.e., the delay between the generation of an event and its reception at the sink node), on reliability (i.e., the number of events correctly reported to the sink node), and on energy consumption (i.e., the sum of the energy consumed by all nodes in the network). Controlled radio interference is generated in the competition area using JamLab [2], and the same patterns are repeated for each contestant.

Figure 1: The competition scenario emulates an industrial control application in which a single sensor observes and reports events over a multi-hop wireless network to a central unit.
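The three metrics described above can be made concrete with a short sketch. It is only an illustration under stated assumptions: the function name, the dictionary-based log format, and the units (milliseconds and millijoules) are hypothetical and do not reflect how the competition infrastructure stores its measurements.

```python
from statistics import mean


def dependability_metrics(generated_ms, received_ms, energy_per_node_mj):
    """Compute end-to-end metrics from hypothetical event logs.

    generated_ms:       {event_id: time the event was generated, in ms}
    received_ms:        {event_id: time the sink reported the event, in ms}
    energy_per_node_mj: {node_id: energy consumed by that node, in mJ}
    """
    # An event counts as delivered only if the sink reported it.
    delivered = [eid for eid in generated_ms if eid in received_ms]
    latencies = [received_ms[eid] - generated_ms[eid] for eid in delivered]
    return {
        # Fraction of generated events that reached the sink.
        "reliability": len(delivered) / len(generated_ms) if generated_ms else 0.0,
        # Latency statistics over delivered events only.
        "mean_latency_ms": mean(latencies) if latencies else None,
        "max_latency_ms": max(latencies) if latencies else None,
        # Energy is summed over all nodes in the network.
        "total_energy_mj": sum(energy_per_node_mj.values()),
    }


if __name__ == "__main__":
    generated = {1: 0, 2: 1000, 3: 2000, 4: 3000}
    received = {1: 50, 2: 1080, 4: 3030}  # event 3 was lost
    energy = {"source": 500, "relay": 250, "sink": 250}
    print(dependability_metrics(generated, received, energy))
```

Note that latency is averaged over delivered events only, so a solution that drops slow events would look faster; this is one reason to read latency and reliability together.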

The competition scenario differs from existing protocol comparisons found in the literature in three ways. First, all competing solutions are tested on the same hardware and under the exact same settings. Second, the evaluation focuses on end-to-end metrics and on whether the application goal is fulfilled, as opposed to protocol-specific low-level metrics such as the number of parent switches or the path length in hops, which do not allow drawing any conclusion on the end-to-end dependability of a system. Third, to increase fairness and realism, we allow the developers of the competing solutions to interact with the benchmarking infrastructure and to optimize the protocol parameters for the specific application scenario at hand. Traditional comparisons rely on the protocols’ default settings, although tiny differences in the parametrization may lead to quite different results. Because the spirit of the competition spurs all contestants into pushing the performance of their solution to the edge, we can understand how suitable state-of-the-art IoT protocols are for the application scenario at hand.

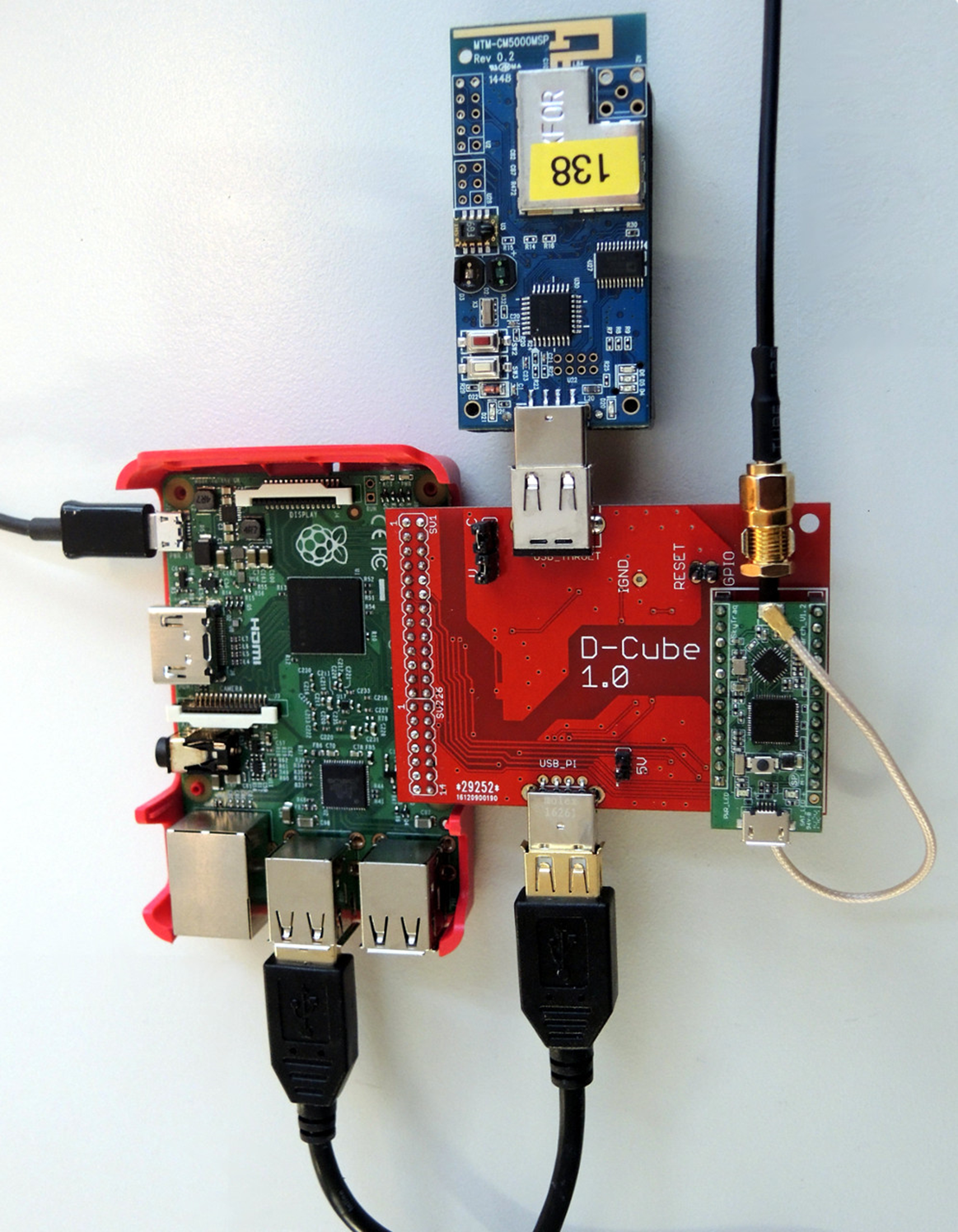

To create our benchmark scenario at minimal cost (i.e., to accurately profile the power consumption of a device, measure end-to-end latency with sub-microsecond accuracy, and detect the occurrence of specific events), we have designed D-Cube [1], a low-cost tool built on top of a Raspberry Pi 3, as shown in Figure 2. D-Cube measures the desired dependability metrics and plots their evolution in real time using InfluxDB and Grafana, allowing the contestants to monitor the performance of their protocols as they run and to parametrize them accordingly [1].

Figure 2: D-Cube, the open-source profiling tool used to benchmark the protocols. Further info can be found in [1].

New-generation protocols are reliable and timely

The results of both the EWSN 2016 competition, which took place in Graz, Austria (http://www.iti.tugraz.at/EWSN2016/cms/index.php?id=8), and the EWSN 2017 edition, held in Uppsala, Sweden (http://www.ewsn2017.org/dependability-competition.html), have shown that new-generation IoT protocols can satisfy the strict dependability requirements of safety-critical systems monitoring discrete events. The top solutions successfully captured and delivered events even in the presence of high interference, while pushing the end-to-end delay well below 100 milliseconds in networks with a diameter of up to seven hops. The key difference between the two editions was the density of the nodes in the network: in the first edition, the network was very dense, with more than 45 nodes deployed in an area of 150 m². In the second edition, the network was instead very sparse, and between three and seven hops were required to reliably convey information from the sensing node to the sink node.

Flooding with hopping is the reference solution for scenarios with single nodes reporting events

In both dense and sparse network settings, all top solutions employed frequency diversity, showing that the shift towards frequency-hopping protocols such as IEEE 802.15.4e, BLE, and WirelessHART is a necessity, especially in safety-critical settings.
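The benefit of frequency diversity can be sketched in a few lines. The example below assumes a slotted network in which all nodes derive the channel from a shared slot counter, in the style of time-slotted channel hopping: the channel is picked from a hopping sequence at index `(slot_number + channel_offset) mod sequence length`. The plain ascending sequence over the 16 IEEE 802.15.4 channels in the 2.4 GHz band (11 to 26) and the set of jammed channels are illustrative assumptions; real deployments typically use a shuffled sequence, and this is not the scheme of any particular competing solution.

```python
# The 16 IEEE 802.15.4 channels in the 2.4 GHz band.
CHANNELS = list(range(11, 27))


def channel_for_slot(slot_number, channel_offset=0, sequence=CHANNELS):
    """Pick the channel for a slot from a shared hopping sequence."""
    return sequence[(slot_number + channel_offset) % len(sequence)]


def fraction_of_slots_hit(jammed_channels, num_slots, sequence=CHANNELS):
    """Fraction of slots whose channel falls on an interfered channel."""
    hit = sum(
        1
        for slot in range(num_slots)
        if channel_for_slot(slot, sequence=sequence) in jammed_channels
    )
    return hit / num_slots


if __name__ == "__main__":
    jammed = {15, 16, 17, 18}  # hypothetical interferer covering four channels
    # Hopping over all channels: only a fraction of the slots is affected.
    print(fraction_of_slots_hit(jammed, 160))
    # Staying on a single jammed channel: every slot is affected.
    print(fraction_of_slots_hit(jammed, 160, sequence=[15]))
```

With hopping, a narrowband interferer degrades only the slots that land on its channels, so a retransmission in the next slot has a good chance of getting through; a single-channel protocol sitting on a jammed channel has no such escape.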

Remarkably, almost all top solutions are based on Glossy [3] and carry out network flooding over multiple channels. Flooding-based solutions achieved the best performance in both dense and sparse settings, showing that stateless flooding is superior to stateful routing in scenarios in which single nodes need to quickly report discrete events to a central unit. The energy efficiency of flooding approaches was the highest across all solutions and superior to that of standard routing approaches. This suggests that flooding can provide low latencies without incurring a significantly higher energy expenditure, a point that has long been debated in the research community. Flooding-based solutions are also attracting increasing interest from industry: several of the competing solutions based on flooding were produced by companies such as ABB, Airbus, Infineon, and Toshiba.
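Why stateless flooding needs no routing state can be seen from a deliberately simplified model: every node that hears a packet for the first time retransmits it in the next slot, so the packet spreads outwards hop by hop. The sketch below is an idealised, loss-free abstraction and not an implementation of Glossy, which additionally relies on tightly synchronized concurrent transmissions; the topology and function name are hypothetical.

```python
from collections import deque


def flood(adjacency, initiator):
    """Return the slot in which each node first receives the flooded packet.

    adjacency: {node: iterable of neighbours within radio range}
    Assumes lossless links and that every node relays on first reception.
    """
    first_rx_slot = {initiator: 0}
    queue = deque([initiator])
    while queue:
        node = queue.popleft()
        for neighbor in adjacency[node]:
            if neighbor not in first_rx_slot:
                # The neighbour hears the relay one slot after the relaying node.
                first_rx_slot[neighbor] = first_rx_slot[node] + 1
                queue.append(neighbor)
    return first_rx_slot


if __name__ == "__main__":
    # Hypothetical sparse line topology: node 0 is the source, node 7 the sink.
    line = {i: [j for j in (i - 1, i + 1) if 0 <= j < 8] for i in range(8)}
    print(flood(line, 0)[7])  # number of slots until the sink hears the event
```

No node stores parents, routes, or link estimates: the only per-packet state is whether the packet has already been relayed. In this model the delivery time grows with the hop distance alone, which is one way to read the low latencies reported above.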

Implications for existing IoT applications

While IoT applications have vastly different requirements, we could demonstrate that, for settings in which a discrete event has to be transmitted reliably to a central station, stateless flooding with channel hopping is clearly the best solution. Quite a number of IoT applications match this description, including home automation systems that use smoke detectors or intrusion detection sensors, body-worn sensors used for elderly monitoring or health-care applications, and simple industrial monitoring systems.

What about multiple sources of traffic?

We plan to extend the competition over the next years to evaluate solutions for even more challenging tasks and scenarios. As we have now covered both dense and sparse networks in which discrete events need to be quickly reported to a central unit, we plan to introduce several sources of traffic as well as feedback loops between nodes. We are also considering adding bounds on the energy consumed per node in order to emulate battery depletion, or on the end-to-end latency in order to evaluate the determinism of the competing solutions.

References

[1] M. Schuß, C.A. Boano, M. Weber, and K. Römer. A Competition to Push the Dependability of Low-Power Wireless Protocols to the Edge. In Proceedings of the 14th International Conference on Embedded Wireless Systems and Networks (EWSN). Uppsala, Sweden. February 2017.

[2] C.A. Boano, T. Voigt, C. Noda, K. Römer, and M.A. Zúñiga. JamLab: Augmenting Sensornet Testbeds with Realistic and Controlled Interference Generation. In Proceedings of the 10th International Conference on Information Processing in Sensor Networks (IPSN). Chicago, IL, USA. April 2011.

[3] F. Ferrari, M. Zimmerling, L. Thiele, and O. Saukh. Efficient network flooding and time synchronization with Glossy. In Proceedings of the 10th International Conference on Information Processing in Sensor Networks (IPSN). Chicago, IL, USA. April 2011.

Carlo Alberto Boano (IEEE member since 2009) is an assistant professor at the Institute for Technical Informatics of Graz University of Technology, Austria. He received a doctoral degree sub-auspiciis praesidentis from Graz University of Technology in 2014 with a thesis on dependable wireless sensor networks, and holds a double Master degree from Politecnico di Torino, Italy, and KTH Stockholm, Sweden. His research interests encompass the design of dependable networked embedded systems, with emphasis on the energy-efficiency and reliability of low-power wireless communications, as well as on the robustness of networking protocols against environmental influences.

Markus Schuß is a PhD student at the Institute for Technical Informatics of Graz University of Technology, Austria. He received his M.Sc degree in Information and Computer Engineering from Graz University of Technology in 2016. As part of his Master’s thesis, he developed a simulation engine based on SystemC-AMS for systems modelled in UML within the semiconductor and automotive sector. His research interests encompass the development of testbeds and benchmarking infrastructures, as well as the evaluation of the robustness and energy-efficiency of wireless communication protocols used in the Internet of Things and in industrial automation.

Kay Römer is professor at and director of the Institute for Technical Informatics at Graz University of Technology, Austria. He previously held positions as professor at the University of Lübeck in Germany and as senior researcher at ETH Zürich in Switzerland. Prof. Römer obtained his doctorate in computer science from ETH Zürich in 2005 with a thesis on wireless sensor networks. His research interests encompass wireless networking, fundamental services, operating systems, programming models, dependability, and deployment methodology of networked embedded systems, in particular Internet of Things, Cyber-Physical Systems, and sensor networks.